|

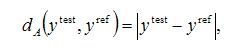

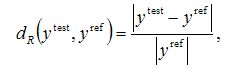

Comparing reference and test results

A key question that arises in the use of a software measurement standard described in this report concerns the comparison of the test result delivered by the software under test with the reference result provided with a Type F1 reference dataset or by Type F2 reference software. The comparison should be objective and reflect the requirements of the application in which the software under test is to be used. The result of the comparison is the means by which a decision is made about the fitness-for-purpose of the software under test. If $y_{\mathrm{test}}$ and $y_{\mathrm{ref}}$ denote, respectively, the test and reference results, then

$$
\left| y_{\mathrm{test}} - y_{\mathrm{ref}} \right| \quad \text{and} \quad \frac{\left| y_{\mathrm{test}} - y_{\mathrm{ref}} \right|}{\left| y_{\mathrm{ref}} \right|}
$$

are metrics for the numerical correctness of the test result that measure, respectively, the absolute and relative differences between the test and reference results. It is unnecessary (and perhaps unreasonable) to expect that the absolute difference between the test and reference results is comparable to the computational precision of the arithmetic used to deliver the test result. (For 16-digit arithmetic, for example, the computational precision is of order $10^{-16}$.)

A tolerance against which to compare the calculated value of the absolute difference can be set on one of several bases. If the developer of the software has made a claim about the numerical correctness of the results returned by the software, then this claim can be used as the basis for the tolerance. If the user of the software has documented a requirement on the numerical correctness of the result, then this requirement can also be used as the basis of the comparison. If the uncertainty associated with the test result is available (evaluated in terms of the uncertainties associated with the measured data defining the surface profile), then it may be sufficient to require that the calculated value of the absolute difference is smaller (by several orders of magnitude, say) than this uncertainty. In this case, we can conclude that any inaccuracy in the result arising from the test software is small compared with the inexactness in the result arising from uncertainties associated with the measurement data.

Care is needed in interpreting the result of a comparison between a test result and a corresponding reference result. The result of the comparison applies only to the software measurement standard used to obtain it. A similar comparison result may be obtained for a similar software measurement standard. However, very different software measurement standards may yield very different comparison results.
It is recommended that the user considers a range of software measurement standards that are representative, and cover a wide range of instances, of the application in which the software under test is to be applied. |
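
The comparison described in this section can be sketched in a few lines of Python. This is a minimal illustration and not part of the report: the function names, the sample values, the tolerance of `1e-9` and the default `factor=1e-3` (meaning "three orders of magnitude smaller than the uncertainty") are all assumptions chosen for the example. The relative difference is left undefined when the reference result is zero.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Comparison:
    absolute: float            # |y_test - y_ref|
    relative: Optional[float]  # |y_test - y_ref| / |y_ref|, None if y_ref == 0


def compare(y_test: float, y_ref: float) -> Comparison:
    """Return the absolute and relative differences between two results."""
    absolute = abs(y_test - y_ref)
    relative = absolute / abs(y_ref) if y_ref != 0 else None
    return Comparison(absolute, relative)


def within_tolerance(y_test: float, y_ref: float, tolerance: float) -> bool:
    """True when the absolute difference does not exceed the tolerance.

    The tolerance is supplied by the caller, for instance from a developer's
    accuracy claim or a user's documented requirement.
    """
    return compare(y_test, y_ref).absolute <= tolerance


def negligible_vs_uncertainty(
    y_test: float, y_ref: float, uncertainty: float, factor: float = 1e-3
) -> bool:
    """True when the absolute difference is below factor * uncertainty.

    factor is an assumed choice; the text only asks for "several orders
    of magnitude".
    """
    return compare(y_test, y_ref).absolute < factor * uncertainty


def assess(cases: Dict[str, Tuple[float, float]], tolerance: float) -> Dict[str, bool]:
    """Apply the tolerance check to several software measurement standards."""
    return {
        name: within_tolerance(y_test, y_ref, tolerance)
        for name, (y_test, y_ref) in cases.items()
    }


# Hypothetical (y_test, y_ref) pairs for three reference datasets.
cases = {
    "dataset_A": (1.2500000001, 1.25),
    "dataset_B": (0.4999999999, 0.5),
    "dataset_C": (3.26, 3.25),
}
results = assess(cases, tolerance=1e-9)
```

Reporting the outcome per dataset, rather than a single pass or fail, keeps visible the point made above: a result obtained with one software measurement standard need not carry over to a very different one.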